Groq

Founded Year

2016

Stage

Unattributed | Alive

Total Raised

$2.503B

Last Raised

$1.5B | 2 mos ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+107 points in the past 30 days

About Groq

Groq operates as an AI inference technology company within the semiconductor and cloud computing sectors. The company provides computation services for AI models, ensuring compatibility and efficiency for various applications. Groq's products are designed for both cloud and on-premises AI solutions. It was founded in 2016 and is based in Mountain View, California.



ESPs containing Groq

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The AI inference processors market develops specialized chips for efficiently executing pre-trained AI models in real-time applications. These processors prioritize low latency and energy efficiency, making them essential for tasks such as image recognition, natural language processing, and recommendation systems in devices such as smartphones, robotics, and autonomous vehicles. The market is expa…

Groq is named as a Leader among 15 other companies, including Advanced Micro Devices, Samsung, and IBM.

Groq's Products & Differentiators

GroqRack™

For data center deployments, GroqRack provides an extensible accelerator network. Combining the power of an eight GroqNode™ set, GroqRack features up to 64 interconnected chips. The result is a deterministic network with an end-to-end latency of only 1.6µs for a single rack, ideal for massive workloads and designed to scale out to an entire data center.


Research containing Groq

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Groq in 5 CB Insights research briefs, most recently on Jan 28, 2025.

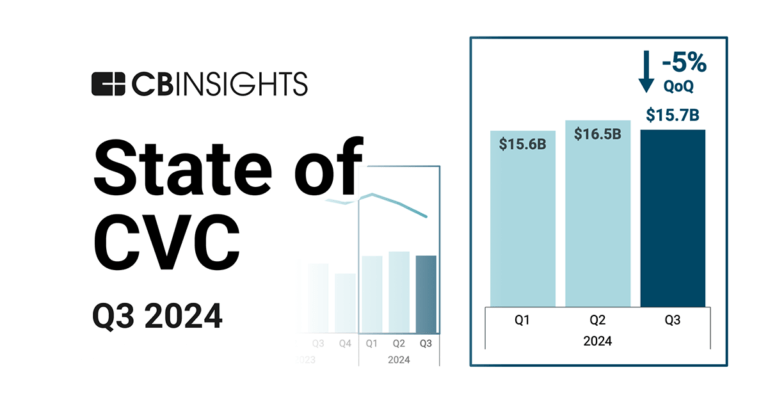

Oct 31, 2024 report

State of CVC Q3’24 Report

Sep 13, 2024

The AI computing hardware market map

Expert Collections containing Groq

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Groq is included in 4 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,270 items

Semiconductors, Chips, and Advanced Electronics

7,321 items

Companies in the semiconductors & HPC space, including integrated device manufacturers (IDMs), fabless firms, semiconductor production equipment manufacturers, electronic design automation (EDA), advanced semiconductor material companies, and more

AI 100 (2024)

100 items

Artificial Intelligence

7,221 items

Groq Patents

Groq has filed 84 patents.

The 3 most popular patent topics include:

- parallel computing

- computer memory

- instruction set architectures

| Application Date | Grant Date | Title | Related Topics | Status |
|---|---|---|---|---|
| 9/20/2021 | 3/11/2025 | | | Grant |

Latest Groq News

Mar 13, 2025

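The 3nm race is crowded with ASIC chips

Marvell expects first-fiscal-quarter sales of about $1.88 billion, up 27% year over year. Of that, AI revenue is about $700 million, driven mainly by growing demand for custom ASICs and related products from customers such as Amazon.

ASICs are becoming more varied

The DeepSeek wave is in full swing, shifting the global technology industry's focus from training to inference. Because DeepSeek is a mixture-of-experts (MoE) model, it can achieve a lower activation ratio. The former balance among compute, memory, and interconnect has changed dramatically, putting new compute architectures back on the same starting line. Under these conditions, custom ASIC chips look increasingly well suited to the AI era.

TPU: If a TPU is compared to a factory, the factory's job is to multiply two piles of numbers (matrices). Each worker (a cell of the systolic array) only performs simple multiplications and additions and then passes the result to the next worker. In this way the whole factory completes the task efficiently, and much faster than an ordinary factory (such as a CPU or GPU).

DPU: A DPU is like a courier hub. It receives packages (data), sorts them quickly, and sends them to the right place. It has its own helpers (accelerators) that are good at specific tasks, such as quickly reading a package's address or checking that a package is intact. This lets the DPU make the whole delivery system (the data center) run more efficiently. The DPU's advantage is that it offloads some tasks from the CPU, reducing the CPU's load, and optimizes the data transfer path, improving overall system efficiency. Its application scenarios are therefore network acceleration, storage management, and security processing in data centers.

NPU: An NPU is a chip designed specifically for neural network computation, using a "data-driven parallel computing" architecture. It efficiently executes large-scale matrix operations, especially the convolution operations in convolutional neural networks (CNNs). If an NPU is compared to a kitchen, the kitchen has many chefs (compute units), each of whom can prepare their own dish (process data) at the same time. For example, one chef chops, one stir-fries, and another plates. The whole kitchen can thus handle many dishes at once, very efficiently. That is how an NPU works: parallel processing makes neural network computation faster. The NPU's advantages are low power consumption when executing AI tasks, which suits edge devices, and a design dedicated to neural networks, which suits deep learning tasks. Its application scenarios are therefore fields that require deep learning, such as face recognition, speech recognition, autonomous driving, and smart cameras.

In short, TPUs suit deep learning, DPUs suit data management in data centers, and NPUs complete neural network tasks quickly through parallel computing and suit a wide range of AI applications.

Recently the LPU has also appeared, a chip designed specifically for language tasks. It offers an architecture and instruction set optimized for language processing and can process text, speech, and similar data more efficiently, accelerating the training and inference of large language models.

Morgan Stanley forecasts that the total addressable market for AI ASICs will grow from $12 billion in 2024 to $30 billion in 2027, with strong competition from Nvidia's AI GPUs over that period.

The number of players in the ASIC race keeps growing.

AWS subsequently released the current Trainium 2, built on a 5nm process. A single Trainium 2 chip delivers 650 TFLOP/s of BF16 performance. Trn2 instances are 25% more energy efficient than comparable GPU instances, and the Trn2 UltraServer is three times more energy efficient than Trn1 instances.

Last December, Amazon announced the new Trainium3, built on a 3nm process. Compared with the previous-generation Trainium2, compute capability increases 2x and energy efficiency improves by 40%; it is expected to arrive at the end of 2025.

Alchip and Marvell are reportedly competing fiercely for AWS's 3nm Trainium project.

Alchip was the first ASIC company to announce that its 3nm design and production ecosystem was ready, supporting TSMC's N3E process. Marvell has already made significant progress on the Trainium 2 project and is expected to continue participating in the Trainium 3 design.

The competition currently centers on back-end design services and CoWoS capacity allocation, and on who can win a larger share of the Trainium project.

Of the TPUs mentioned earlier, Google's are the most representative. Google's TPU line runs from v1 to the latest Trillium TPU. TPUs powered 100% of Gemini 2.0 training and inference. According to Google, early Trillium customer AI21 Labs sees a significant improvement. AI21 Labs CTO Barak Lenz said: "The advancements in scale, speed, and cost-efficiency of Trillium are significant." Google's TPU v7 is now in development, also on a 3nm process, with mass production expected in 2026.

In terms of partners, Google has always worked closely with Broadcom. The collaboration dates back to TPU v1; Google has co-designed every TPU announced to date with Broadcom, and Broadcom's revenue in this area has risen along with Google.

Microsoft is also pushing into ASICs. Maia 200 is a high-performance accelerator that Microsoft customized for data center and AI tasks; it also uses a 3nm process and is expected to enter mass production in 2026. The current Maia 100 is likewise designed for large-scale AI workloads in Azure. It supports massively parallel computing and is especially suited to natural language processing (NLP) and generative AI tasks. Based on current information, Microsoft has chosen to work with Marvell on this product.

As early as January this year, reports emerged that US inference chip company Groq was already running DeepSeek on its own LPU chips, an order of magnitude faster than the latest H100, reaching 24,000 tokens per second. Notably, in December 2024 Groq built the largest inference cluster in the Middle East in Dammam, Saudi Arabia, comprising 19,000 Groq LPUs.

OpenAI's first AI ASIC is nearing completion. The company will finalize the design of its first in-house chip in the next few months and plans to send it to TSMC for fabrication (taping out). According to the latest reports, OpenAI aims to reach mass production at TSMC in 2026.

Are ASICs really cost-effective?

Start with performance. An ASIC is a chip customized for a specific task, and its core advantages are high performance and low power consumption. On the same budget, AWS's Trainium 2 can complete inference tasks faster than Nvidia's H100 GPU, with 30% to 40% better price-performance. Trainium3 is scheduled to launch in the second half of 2025, with 2x the compute performance and 40% better energy efficiency.

In addition, because of their architecture, GPUs generally retain functional blocks such as graphics rendering and video encoding/decoding, which sit mostly idle during AI computation. One study found that about 15% of the transistors on Nvidia's H100 GPU go unused during AI computation.

On cost, an ASIC's unit cost in high-volume production is significantly lower than a GPU's; at scale it can fall to one third of a GPU's. However, the one-time non-recurring engineering (NRE) cost is very high. For a custom ASIC on a 5nm process, for example, NRE can reach $100 million to $200 million. Once the chip ships in volume, though, the NRE cost can be largely amortized.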
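The NRE amortization argument at the end of the news item above can be made concrete with a rough sketch. The NRE range ($100 million to $200 million) and the one-third unit-cost ratio come from the article; the $30,000 GPU unit price is a purely hypothetical figure chosen for illustration, and the function names are not from any real library.

```python
def amortized_unit_cost(nre_usd: int, unit_cost_usd: int, units: int) -> float:
    """Per-chip cost once the one-time NRE is spread over `units` chips."""
    if units <= 0:
        raise ValueError("units must be positive")
    return unit_cost_usd + nre_usd / units


def break_even_units(nre_usd: int, asic_unit_cost_usd: int, gpu_unit_cost_usd: int):
    """Smallest volume at which total ASIC cost no longer exceeds total GPU cost.

    Returns None if the ASIC is not cheaper per unit, so NRE is never recovered.
    """
    saving_per_unit = gpu_unit_cost_usd - asic_unit_cost_usd
    if saving_per_unit <= 0:
        return None
    # Integer ceiling division: enough units for the savings to cover NRE.
    return -(-nre_usd // saving_per_unit)


# Hypothetical GPU price (assumption, not from the article).
GPU_UNIT_COST = 30_000
# The article says ASIC unit cost can fall to one third of a GPU's at scale.
ASIC_UNIT_COST = GPU_UNIT_COST // 3

for nre in (100_000_000, 200_000_000):  # NRE range cited in the article
    units = break_even_units(nre, ASIC_UNIT_COST, GPU_UNIT_COST)
    print(f"NRE ${nre:,}: break-even at {units:,} units")
```

Under these assumed prices the break-even volume scales linearly with NRE, which is why the article's point holds only for buyers who can ship at large volume.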

Groq Frequently Asked Questions (FAQ)

When was Groq founded?

Groq was founded in 2016.

Where is Groq's headquarters?

Groq's headquarters is located at 301 Castro Street, Mountain View.

What is Groq's latest funding round?

Groq's latest funding round is Unattributed.

How much did Groq raise?

Groq raised a total of $2.503B.

Who are the investors of Groq?

Investors of Groq include Kingdom of Saudi Arabia, KDDI, Neuberger Berman, Cisco, Samsung Catalyst and 19 more.

Who are Groq's competitors?

Competitors of Groq include Ampere, Blaize, Furiosa AI, Tenstorrent, NeuReality and 7 more.

What products does Groq offer?

Groq's products include GroqRack™ and 4 more.


Compare Groq to Competitors

Cerebras focuses on artificial intelligence (AI) acceleration through its development of wafer-scale processors and supercomputers for different sectors. The company provides computing solutions that support deep learning, natural language processing, and other AI workloads. Cerebras serves industries including healthcare, scientific computing, and financial services with its AI supercomputers and model training services. It was founded in 2016 and is based in Sunnyvale, California.

Tenstorrent is a computing company specializing in hardware focused on artificial intelligence (AI) within the technology sector. The company offers computing systems for the development and testing of AI models, including desktop workstations and rack-mounted servers powered by its Wormhole processors. Tenstorrent also provides an open-source software platform, TT-Metalium, for customers to customize and run AI models. It was founded in 2016 and is based in Toronto, Canada.

Wave Computing focuses on AI-native dataflow technology and processor architecture in the technology sector. The company provides products and solutions for deep learning and artificial intelligence applications, applicable to devices from edge to datacenter environments. Wave Computing's MIPS division offers licensing for processor architecture and core intellectual property. It is based in Campbell, California.

Mythic is an analog computing company that specializes in AI acceleration technology. Its products include the M1076 Analog Matrix Processor and M.2 key cards, which provide power-efficient AI inference for edge devices and servers. Mythic primarily serves sectors that require real-time analytics and data throughput, such as smarter cities and spaces, drones and aerospace, and AR/VR applications. Mythic was formerly known as Isocline Engineering. It was founded in 2012 and is based in Austin, Texas.

Westwell Lab specializes in autonomous driving solutions and operates within the bulk logistics industry. The company integrates artificial intelligence with new energy sources to improve operations in global logistics. Westwell Lab serves sectors such as seaports, railway hubs, dry ports, airports, and manufacturing facilities. It was founded in 2015 and is based in Shanghai, China.

ChipIntelli operates in the intelligent voice chip industry and focuses on providing solutions for more natural, simple, and smart human-machine interactions. The company offers a range of intelligent voice chips and solutions that cater to various applications, including offline voice recognition and voice-enabled smart devices. ChipIntelli's products are primarily used in the smart home appliances, smart lighting, smart automotive, and smart education/entertainment sectors. It was founded in 2015 and is based in Chengdu, China.
